Tag: mesh-based architectures

A Computational RAM (C-RAM) Architecture for Real-Time Mesh-Based Video Motion Tracking: Part II Motion Compensation

This paper presents a new Computational-RAM (C-RAM) architecture for real-time mesh-based video motion tracking. Part I presents the motion estimation part of the proposed architecture; Part II, here, presents a new C-RAM mesh-based motion compensation architecture. The inputs to the architecture are the mesh-node motion vectors and the reference frame, and the output is the compensated (i.e., predicted) frame. The architecture uses an affine transformation to warp the deformed patches in the reference frame into the undeformed patches in the current frame, and it computes the affine parameters using a multiplication-free algorithm. The reference and current frames are stored in embedded S-RAMs generated with the Virage™ Memory Compiler. The proposed motion compensation architecture has been prototyped, simulated, and synthesized using TSMC 0.18 μm CMOS technology. At a 100 MHz clock frequency, the proposed architecture processes one CIF video frame (i.e., 352×288 pixels) in 0.59 ms, which means it can process up to 1694 frames per second. The core area of the proposed motion compensation architecture is 28.04 mm² and it consumes 31.15 mW.

Mohammed Sayed and Wael Badawy, “A Computational RAM (C-RAM) Architecture for Real-Time Mesh-Based Video Motion Tracking: Part II Motion Compensation,” Journal of Circuits, Systems and Computers, Vol. 13, Issue 6, December 2004, pp. 1217-1232.
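
The affine warping described in the Part II abstract can be illustrated in software. The following is a minimal sketch, not the paper's hardware design: it solves for the six affine parameters with an ordinary linear solve (Cramer's rule) rather than the multiplication-free algorithm the authors use, and it samples the reference frame with nearest-neighbour rounding. The function names, the list-of-lists frame layout, and the convention that a motion vector points from a current-frame node to its position in the reference frame are all assumptions made for illustration.

```python
def affine_from_triangle(cur_tri, ref_tri):
    """Solve x' = a1*x + a2*y + a3 and y' = a4*x + a5*y + a6 from three
    vertex correspondences (current-frame vertex -> reference-frame vertex)."""
    (x1, y1), (x2, y2), (x3, y3) = cur_tri
    det = (x1 - x3) * (y2 - y3) - (x2 - x3) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate triangle")

    def solve(v1, v2, v3):
        # Cramer's rule for one output coordinate.
        a = ((v1 - v3) * (y2 - y3) - (v2 - v3) * (y1 - y3)) / det
        b = ((x1 - x3) * (v2 - v3) - (x2 - x3) * (v1 - v3)) / det
        c = v3 - a * x3 - b * y3
        return a, b, c

    (u1, w1), (u2, w2), (u3, w3) = ref_tri
    return solve(u1, u2, u3) + solve(w1, w2, w3)


def inside(tri, x, y):
    """Edge-function test: True if (x, y) lies in or on the triangle."""
    (x1, y1), (x2, y2), (x3, y3) = tri
    d1 = (x - x2) * (y1 - y2) - (x1 - x2) * (y - y2)
    d2 = (x - x3) * (y2 - y3) - (x2 - x3) * (y - y3)
    d3 = (x - x1) * (y3 - y1) - (x3 - x1) * (y - y1)
    has_neg = d1 < 0 or d2 < 0 or d3 < 0
    has_pos = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_neg and has_pos)


def compensate_patch(reference, predicted, cur_tri, motion_vectors):
    """Fill one triangular patch of `predicted` from `reference`.

    cur_tri holds integer vertex positions in the current frame;
    motion_vectors holds the (dx, dy) of each vertex (assumed convention:
    reference position = current position + motion vector).
    """
    ref_tri = [(x + dx, y + dy)
               for (x, y), (dx, dy) in zip(cur_tri, motion_vectors)]
    a1, a2, a3, a4, a5, a6 = affine_from_triangle(cur_tri, ref_tri)
    h, w = len(reference), len(reference[0])
    xs = [p[0] for p in cur_tri]
    ys = [p[1] for p in cur_tri]
    # Scan the bounding box of the patch, clipped to the frame.
    for y in range(max(min(ys), 0), min(max(ys), h - 1) + 1):
        for x in range(max(min(xs), 0), min(max(xs), w - 1) + 1):
            if inside(cur_tri, x, y):
                # Map the current-frame pixel into the reference frame.
                rx = int(round(a1 * x + a2 * y + a3))
                ry = int(round(a4 * x + a5 * y + a6))
                rx = min(max(rx, 0), w - 1)
                ry = min(max(ry, 0), h - 1)
                predicted[y][x] = reference[ry][rx]
```

Calling `compensate_patch` once per mesh triangle would assemble a full predicted frame. A hardware design would avoid the divisions and per-pixel multiplications shown here; the sketch only makes the geometry of the warp concrete.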

A Computational RAM (C-RAM) Architecture for Real-Time Mesh-Based Video Motion Tracking: Part I Motion Estimation

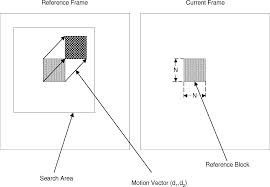

This paper presents a new Computational-RAM (C-RAM) architecture for real-time mesh-based video motion tracking. Motion tracking consists of two operations: mesh-based motion estimation and mesh-based motion compensation. The proposed motion estimation architecture is presented in Part I and the proposed motion compensation architecture in Part II. The motion estimation architecture stores two frames and computes motion vectors for a regular triangular mesh structure as defined by MPEG-4 Part 2 [1]. It uses the block-matching algorithm (BMA) to estimate the vertical and horizontal motion vectors for each mesh node. Parallel and pipelined implementations are used to meet the heavy computational requirements of the motion estimation process. The two frames are stored in embedded S-RAMs generated with the Virage™ Memory Compiler. The proposed motion estimation architecture has been prototyped, simulated, and synthesized using TSMC 0.18 μm CMOS technology. At a 100 MHz clock frequency, the proposed architecture processes one CIF video frame (i.e., 352×288 pixels) in 1.48 ms, which means it can process up to 675 frames per second. The core area of the proposed motion estimation architecture is 24.58 mm² and it consumes 46.26 mW.

Read More: https://www.worldscientific.com/doi/abs/10.1142/S0218126604001921

Mohammed Sayed and Wael Badawy, “A Computational RAM (C-RAM) Architecture for Real-Time Mesh-Based Video Motion Tracking: Part I Motion Estimation,” Journal of Circuits, Systems and Computers, Vol. 13, Issue 6, December 2004, pp. 1203-1216.
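
The block-matching step named in the Part I abstract can be sketched as an exhaustive search around each mesh node. This is an illustrative software version only: the abstract does not state the block size, search range, or matching cost, so the sum of absolute differences (SAD), the `half` and `search` parameters, and the function names below are assumptions. The motion vector is taken to point from the node in the current frame to its best match in the reference frame.

```python
def sad(cur, ref, cx, cy, rx, ry, half):
    """Sum of absolute differences between the block centred at (cx, cy)
    in `cur` and the block centred at (rx, ry) in `ref`."""
    total = 0
    for j in range(-half, half + 1):
        for i in range(-half, half + 1):
            total += abs(cur[cy + j][cx + i] - ref[ry + j][rx + i])
    return total


def estimate_node_motion(cur, ref, cx, cy, half=1, search=2):
    """Full-search block matching for one mesh node at (cx, cy).

    Returns the (dx, dy) with the lowest SAD inside a +/- `search` window.
    Block size (2*half + 1) and search range are hypothetical defaults.
    """
    h, w = len(ref), len(ref[0])
    if not (half <= cx < len(cur[0]) - half and half <= cy < len(cur) - half):
        raise ValueError("node block must lie fully inside the current frame")
    best_cost = None
    best_mv = (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            rx, ry = cx + dx, cy + dy
            # Skip candidates whose block would leave the reference frame.
            if not (half <= rx < w - half and half <= ry < h - half):
                continue
            cost = sad(cur, ref, cx, cy, rx, ry, half)
            if best_cost is None or cost < best_cost:
                best_cost, best_mv = cost, (dx, dy)
    return best_mv
```

Every candidate offset is evaluated independently of the others, which is the property that makes this search a natural fit for the parallel and pipelined implementation the abstract describes.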